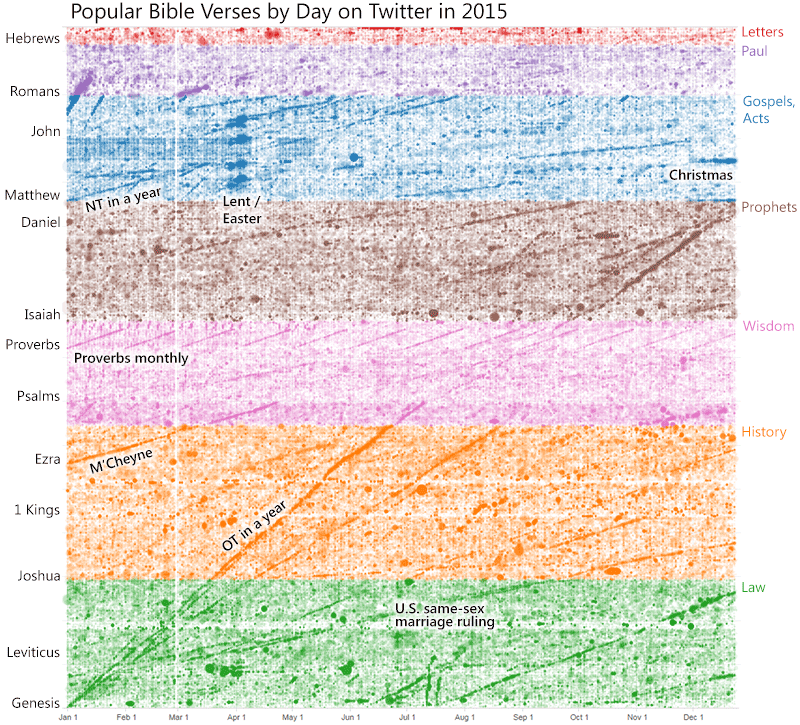

Here’s a quick look at the 40 million Bible verses shared on Twitter in 2015.

Through the Year

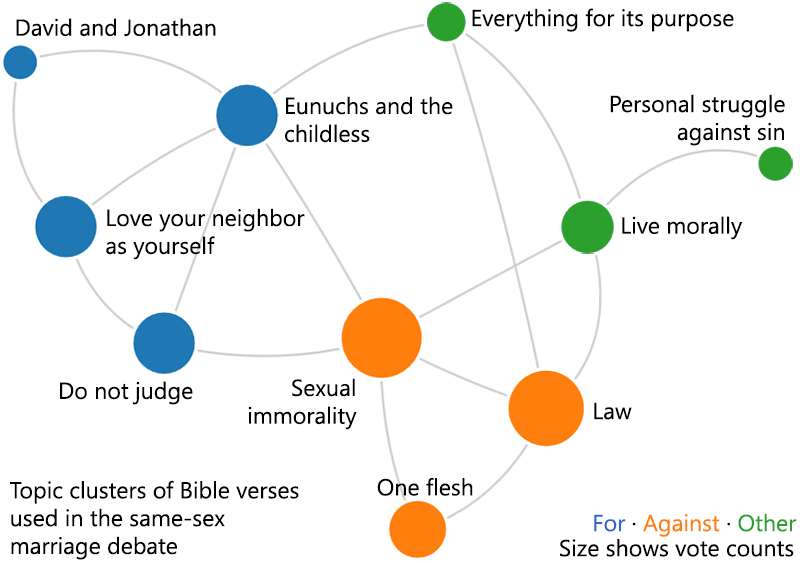

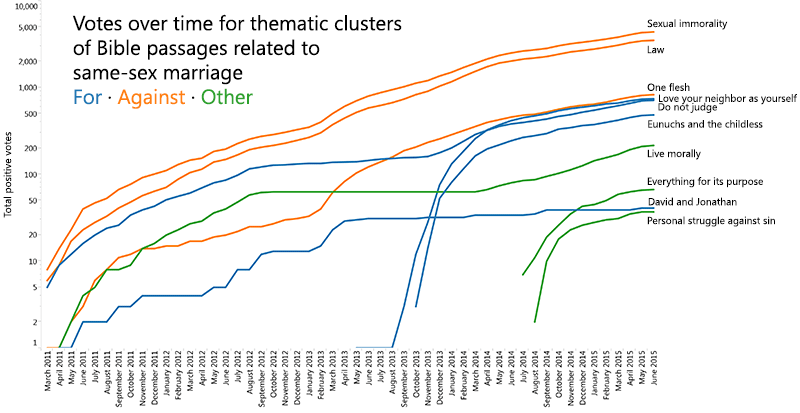

As with last year, in 2015 we see the prevalence of through-the-Bible-in-a-year plans that feature certain verses on particular days. Also note the cluster in Leviticus and Deuteronomy after the U.S. Supreme Court ruling on same-sex marriage.

Most-Popular Verses

| Rank | Verse | Tweets | Text |
|---|---|---|---|
| 1. | Phil 4:13 | 262,150 | I can do all things through him who strengthens me. |
| 2. | John 3:16 | 206,480 | For God so loved the world, that he gave his only Son, that whoever believes in him should not perish but have eternal life. |
| 3. | Jer 29:11 | 127,355 | For I know the plans I have for you, declares the LORD, plans for welfare and not for evil, to give you a future and a hope. |
| 4. | Rom 8:18 | 115,719 | For I consider that the sufferings of this present time are not worth comparing with the glory that is to be revealed to us. |
| 5. | Rom 8:28 | 115,588 | And we know that for those who love God all things work together for good, for those who are called according to his purpose. |
| 6. | Prov 3:5 | 110,216 | Trust in the LORD with all your heart, and do not lean on your own understanding. |
| 7. | 1Pet 5:7 | 98,974 | Casting all your anxieties on him, because he cares for you. |
| 8. | Rom 5:8 | 97,841 | But God shows his love for us in that while we were still sinners, Christ died for us. |
| 9. | 2Tim 1:7 | 88,924 | For God gave us a spirit not of fear but of power and love and self-control. |
| 10. | Ps 56:3 | 86,998 | When I am afraid, I put my trust in you. |

You can also download a text file (411 KB) with the complete list of verses and how many times they were tweeted in 2015.

Top Verse Sharers

Here are the top non-spam (as far as I can tell) accounts and how many Bible verse tweets they posted this year (including retweets):

- JohnPiper (109,589 tweets)

- Franklin_Graham (94,341 tweets)

- DangeRussWilson (83,176 tweets)

- JosephPrince (76,031 tweets)

- siwon407 (31,141 tweets)

- DaveRamsey (29,690 tweets)

- TimTebow (29,212 tweets)

- mainedcm (28,687 tweets)

- JoyceMeyer (25,381 tweets)

- BishopJakes (24,243 tweets)

- jamesmacdonald (23,753 tweets)

- camerondallas (22,499 tweets)

- JordanElizabeth (21,735 tweets)

- AllyBrooke (21,108 tweets)

- Kevinwoo91 (19,735 tweets)

- ToriKelly (19,716 tweets)

- ihopkc (16,920 tweets)

- revraycollins (16,211 tweets)

- InTouchMin (15,888 tweets)

- ToddAdkins (13,825 tweets)

Most-Retweeted Tweets

Here are the year’s most-retweeted tweets with Bible verses in them. They come from various pop stars, Vine personality Cameron Dallas, someone named Cory Machado (I’m unclear why he has a million followers), a fan account for boxer Manny Pacquiao (not the boxer himself), and Tim Tebow. I don’t understand Korean pop star Siwon Choi’s tweet at all.

“And now these three remain: faith, hope and love. But the greatest of these is love.” 1Corinthians 13:13 ❤️ #LoveTrumpsAll

Psalms 16:11 Walk on his path and your life will be abundant

I’m grateful, today is a great day! Don’t brag about yourself let others praise you. -Proverbs 27:2 @BlakeMallen @RyanBlair @ViSalus

“The light shines in the darkness, and the darkness can never extinguish it” -John 1:5

I call on the LORD in my distress, and he answers me. Psalm 120:1

Be kind to one another, tender hearted, forgiving each other, just as God in Christ also has forgiven you. Ephesians 4:32

Bible Spam

Around 20 million of the 40 million verses shared on Twitter this year, as far as I can tell, came from Bible spam accounts: accounts that do nothing but tweet Bible verses all day (hundreds of times a day in some cases). I removed the most-prolific accounts from the above data, but it undoubtedly still contains tweets from many Bible spammers.