In a recent issue of Wired, Steven Levy writes about Narrative Science, a company that uses data to write automated news stories. Right now, they mostly deal in data-intensive fields like sports and finance, but the company is confident that it will easily expand into other areas—the company’s co-founder even predicts that an algorithm will win a Pulitzer Prize in the next five years.

In February 2012, I attended a session at the TOC Conference given in part by Kristian Hammond, the CTO and co-founder of Narrative Science. During the session, Hammond mentioned that sports stories have a limited number of angles (e.g., a “blowout win” or a “come-from-behind victory”); you can probably sit down and think up a fairly comprehensive list in short order. Even in fictional sports stories, writers use only around sixty common tropes as part of the narrative. Once you have an angle (or your algorithm has decided on one), you just slot in the relevant data, add a little color commentary, and you have your story.
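To make the "pick an angle, then slot in the data" idea concrete, here is a toy sketch. It is not how Narrative Science actually works; the `BoxScore` fields, the three angles, and the 20-point blowout threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class BoxScore:
    winner: str
    loser: str
    winner_score: int
    loser_score: int
    winner_trailed_at_half: bool


# Hypothetical angle templates; a real system would have many more.
TEMPLATES = {
    "blowout": "{winner} routed {loser} {ws}-{ls} in a game that was never close.",
    "comeback": "{winner} rallied from a halftime deficit to beat {loser} {ws}-{ls}.",
    "close": "{winner} edged {loser} {ws}-{ls} in a tight contest.",
}


def choose_angle(game: BoxScore, blowout_margin: int = 20) -> str:
    """Pick a narrative angle from the data using simple, made-up rules."""
    if game.winner_trailed_at_half:
        return "comeback"
    if game.winner_score - game.loser_score >= blowout_margin:
        return "blowout"
    return "close"


def write_recap(game: BoxScore) -> str:
    """Slot the box-score data into the template for the chosen angle."""
    angle = choose_angle(game)
    return TEMPLATES[angle].format(
        winner=game.winner,
        loser=game.loser,
        ws=game.winner_score,
        ls=game.loser_score,
    )


print(write_recap(BoxScore("Tigers", "Bears", 98, 71, False)))
```

The "color commentary" step is left out; in this sketch it would amount to appending more template sentences keyed to the same angle.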

At the time, I was struggling to understand how automated content could apply to Bible study; Levy’s article leads me to think that robosermons, or sermons automatically generated by a computer program, are the way of the future.

Parts of a Robosermon

After all, from a data perspective, sermons don’t differ much from sports stories. In particular, they have three components:

First, as with sports stories, sermons follow predictable structures and patterns. David Schmitt of Concordia Theological Seminary suggests a taxonomy of around thirty sermon structures. Even if this list isn’t comprehensive, it would probably take, at most, 100 to 200 structures to categorize nearly all sermons.

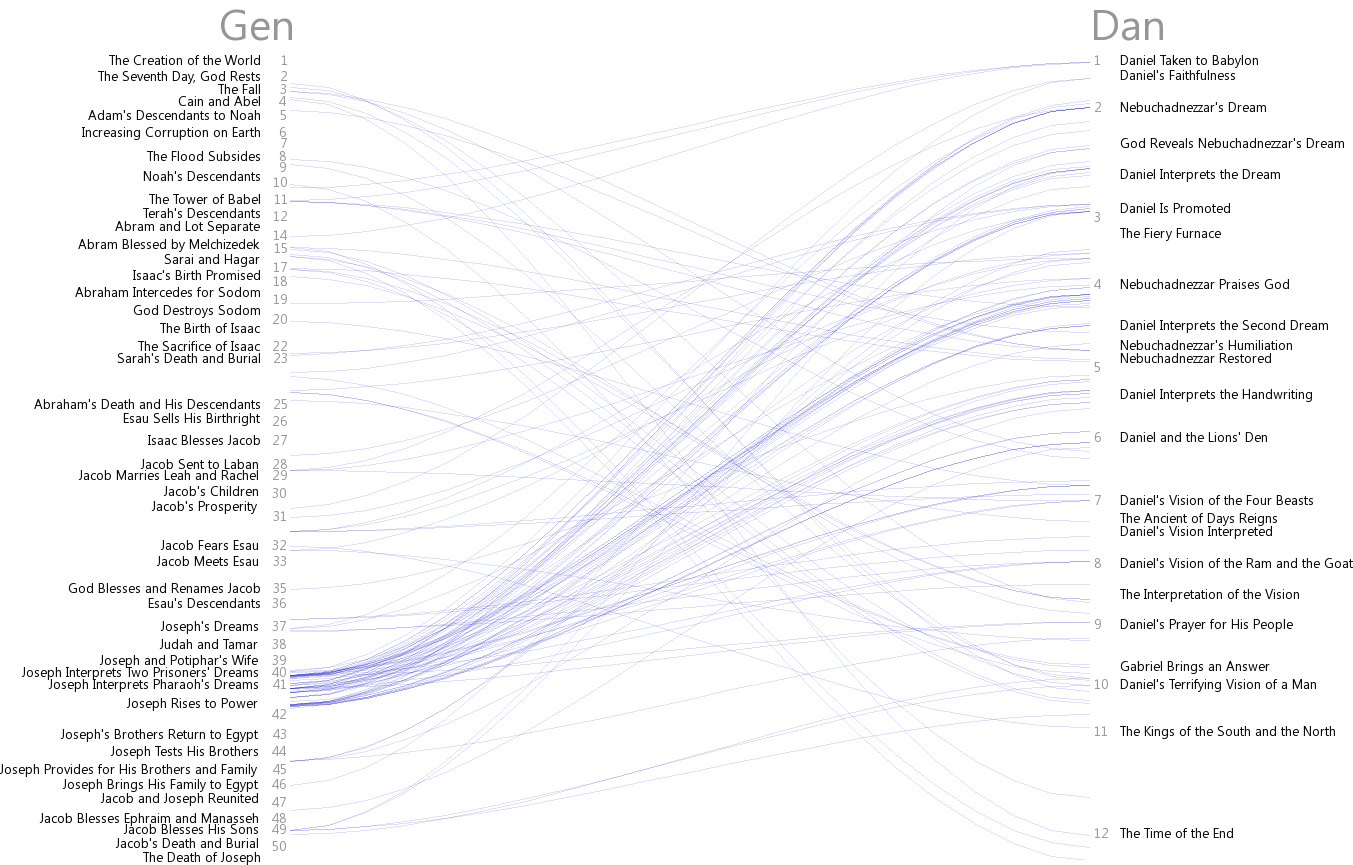

Second, sermons deal with predictable content: whereas sports have box scores, sermons have Bible texts and topics. A sermon will probably deal with a passage from the Bible in some way, and the 31,000 verses in the Bible make up a large but manageable set of source material (especially since most sermons treat a passage rather than a single verse; you can probably cut this list down to around 2,000 sections). Topically, SermonCentral.com lists only 500 sermon topics in its database of 120,000 sermons. Because the popularity of both verses and topics follows a power-law distribution (think of the 80/20 rule), you can categorize most sermons using a small portion of the available possibilities: SermonCentral.com has 1,200 sermons on John 1 compared to seven on Numbers 35, and 1,400 sermons on “Jesus’ teachings” versus four on “morning.”
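A quick calculation shows why a power law makes the problem manageable. The sketch below assumes, purely for illustration, that topic popularity follows a Zipf distribution with exponent 1 (the most popular topic is twice as common as the second, three times as common as the third, and so on); the real SermonCentral.com distribution may be steeper or flatter.

```python
def zipf_coverage(n_items: int, top_k: int, s: float = 1.0) -> float:
    """Share of all sermons covered by the top_k most popular items,
    assuming item popularity follows a Zipf law with exponent s."""
    weights = [1 / rank**s for rank in range(1, n_items + 1)]
    return sum(weights[:top_k]) / sum(weights)


# 500 topics, as on SermonCentral.com; keep only the most popular 20%.
print(f"{zipf_coverage(500, 100):.0%}")  # prints 76%
```

Under that assumption, a system that handled only the 100 most popular topics would already cover roughly three quarters of all sermons.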

Third, sermons generally involve illustrations or stories, much like the color commentary of sports stories. Finding raw material for illustrations shouldn’t present a problem to a computer program; a quick search on Amazon turns up 1,700 books on sermon illustrations and an additional 10,000 or so on general anecdotes. You can probably extract hundreds of thousands of illustrations from just these sources. Alternatively, if a recent news story relates to your topic, the system can add the relevant parts to your sermon with little trouble (especially if a computer wrote the news story to begin with).

Application

You end up being able to say, “I want to preach a sermon on Philippians 2 that emphasizes Christ’s humility as a model for us.” Then—and here’s the part that doesn’t exist yet but that technology like Narrative Science’s will provide—an algorithm suggests, say, an amusing but poignant anecdote to start with, followed by three points of exegesis, exhortation, and application, and finishing with a trenchant conclusion. You tweak the content a bit, throwing in a shout-out to a behind-the-scenes parishioner who does a lot of work but rarely receives recognition, and call it done.
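The request-to-outline step might be sketched as a simple slot-filling pipeline. Everything here is hypothetical: the `Illustration` and `SermonRequest` types, the fixed five-part structure (mirroring the anecdote, three points, and conclusion described above), and the first-match illustration lookup are stand-ins for the far more sophisticated generation the paragraph imagines.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Illustration:
    text: str
    topics: set[str]


@dataclass
class SermonRequest:
    passage: str
    theme: str


# Hypothetical structure: opening anecdote, three points, conclusion.
STRUCTURE = ["opening illustration", "exegesis", "exhortation", "application", "conclusion"]


def pick_illustration(theme: str, library: list[Illustration]) -> Illustration | None:
    """Return the first illustration tagged with the requested theme, if any."""
    for item in library:
        if theme in item.topics:
            return item
    return None


def outline(request: SermonRequest, library: list[Illustration]) -> list[str]:
    """Fill each slot of the structure; slots without real content get a placeholder."""
    illustration = pick_illustration(request.theme, library)
    sections = []
    for part in STRUCTURE:
        if part == "opening illustration" and illustration is not None:
            sections.append(f"{part}: {illustration.text}")
        else:
            sections.append(f"{part}: {request.passage} on {request.theme}")
    return sections


library = [Illustration("A janitor who quietly kept the church running.", {"humility"})]
for line in outline(SermonRequest("Philippians 2", "humility"), library):
    print(line)
```

The pastor's "tweak the content a bit" step corresponds to editing the returned list before it becomes prose.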

Why limit sermons to pastors, though? Why shouldn’t churchgoers be able to ask for custom sermons that fit exactly their circumstances? “I’d like a ten-part audio sermon series on Revelation from a dispensational perspective where each sermon exactly fits the length of my commute.” “Give me six weeks of premarital devotions for my boyfriend and me. I’ve always been a fan of Charles Spurgeon, so make it sound like he wrote them.”

Levy opens his Wired article with an anecdote about how grandparents would find articles about their grandchildren’s Little League games just as interesting as “anything on the sports pages.” He doesn’t mention that what they really want is a recap with their grandchild as the star (or at least as a strong supporting character—it’s like one of those children’s books where you customize the main character’s name and appearance). Robosermons let you tailor the sermon’s content so that your specific problems or questions form the central theme.

The logical end of this technology is a sermonbot that develops a following of eager listeners and readers, in the same way that an automated newspaper reporter would create fans on its way to winning a Pulitzer.

You may argue that robosermons diminish the role of the Holy Spirit in preparing sermons, or that they amount to plagiarism. I’m not inclined to disagree with you.

Conclusion

Building a robosermon system involves five components: (1) sermon structures; (2) Bible verses; (3) topics; (4) illustrations; and (5) technology like Narrative Science’s to put everything together coherently. It would also be helpful to have (6) a large set of existing sermons to serve as raw data. It’s a complicated problem but hardly an insurmountable one over the next ten years, should someone want to tackle it.

I’m not sure they should; that way lies robopologetics and robovangelism.

If you’re not an algorithm and you want to know how to prepare and deliver a sermon, I suggest listening to this 29-part course on preaching by Bryan Chapell at Biblical Training. It’s free and full of homiletic goodness.